작은 메모장 (Small Notepad)

4. File I/O, Mail Automation, and Web Service Crawling

Continuing from the previous post on file I/O.

<Libraries used>

pip install python-dotenv
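python-dotenv is used in the last version of the mail script below to keep the login ID and password out of the source code. `load_dotenv()` only copies the `KEY=value` lines of a `.env` file into `os.environ`; the values still have to be read back with `os.getenv`. A minimal sketch, assuming a `.env` file with the hypothetical keys `SECRET_ID` and `SECRET_PASS` (e.g. a line `SECRET_ID=your_naver_id`); the helper name `load_credentials` is also hypothetical. The import is guarded so the sketch falls back to ordinary environment variables when python-dotenv is not installed:

```python
import os

try:
    from dotenv import load_dotenv  # pip install python-dotenv
except ImportError:
    load_dotenv = None              # fall back to variables already in the environment

def load_credentials():
    """Hypothetical helper: return (id, password) from .env or the environment."""
    if load_dotenv is not None:
        load_dotenv()  # loads .env into os.environ; existing variables are not overwritten
    user = os.getenv("SECRET_ID")        # hypothetical key name
    password = os.getenv("SECRET_PASS")  # hypothetical key name
    if not user or not password:
        raise RuntimeError("SECRET_ID / SECRET_PASS are not set")
    return user, password
```

Keep the `.env` file out of version control (for example by listing it in `.gitignore`) so the password is not published together with the code.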

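```python
import os
import time

DIR_WATCH = "static"  # directory to watch

# Snapshot of the file names that already exist
previous_files = set(os.listdir(DIR_WATCH))

# WatchDogs: poll the directory once per second
while True:
    time.sleep(1)
    print("Watching..")

    current_files = set(os.listdir(DIR_WATCH))
    new_files = current_files - previous_files  # names that appeared since the last check

    # Update
    for file in new_files:
        path = os.path.join(DIR_WATCH, file)
        if not os.path.isfile(path):  # skip subdirectories; open() would fail on them
            continue
        print(f"+ Detected New File : {file}")

        with open(path, "r", encoding="utf-8") as f:
            lines = f.readlines()

        for line in lines:
            # Check: lines starting with a comment marker may hold important info
            if line.startswith("#") or line.startswith("//"):
                print(f"!!Something Important Info in {file} content!!")
                print(line)

    previous_files = current_files
```

Advanced file I/O practice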
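The polling loop above reduces to two small steps: a set difference between two directory snapshots, and a prefix test on each line. A minimal sketch that pulls both steps into pure functions so they can be tried without a real `static` folder; `snapshot`, `find_new_files` and `important_lines` are hypothetical names, not part of the original code:

```python
import os

def snapshot(directory):
    """Hypothetical helper: names of the regular files in `directory` (subdirectories skipped)."""
    return {name for name in os.listdir(directory)
            if os.path.isfile(os.path.join(directory, name))}

def find_new_files(previous, current):
    """Hypothetical helper: names present now but not in the previous snapshot."""
    return current - previous

def important_lines(lines):
    """Hypothetical helper: lines starting with "#" or "//" (startswith accepts a tuple)."""
    return [line for line in lines if line.startswith(("#", "//"))]

if __name__ == "__main__":
    before = {"a.txt", "b.txt"}
    after = {"a.txt", "b.txt", "c.txt"}
    print(find_new_files(before, after))  # {'c.txt'}
    print(important_lines(["# key=1\n", "plain text\n", "// TODO\n"]))
```

Swapping the operands (`previous - current`) would give the files that were deleted, which the original loop does not report.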

Implementing a mail automation service

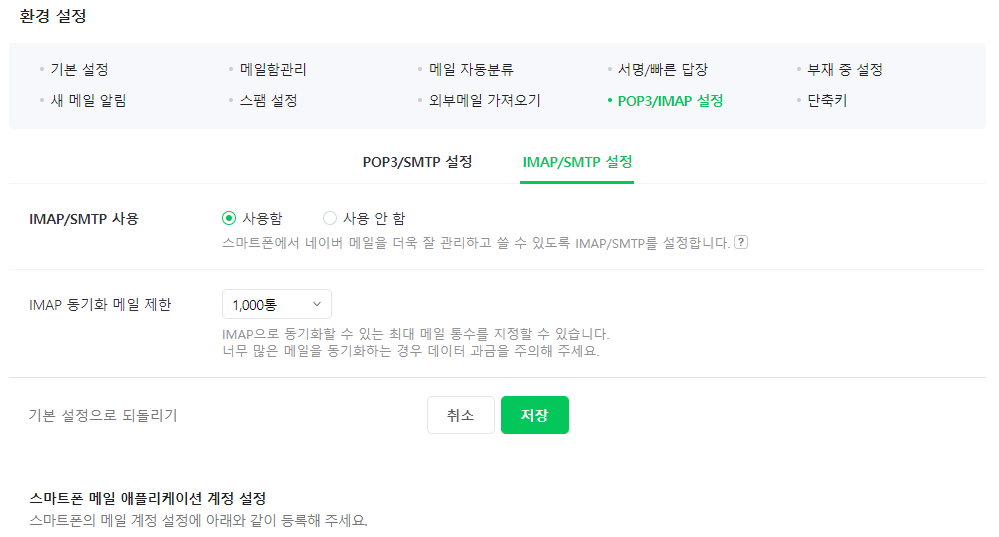

IMAP/SMTP must be enabled in the mail account settings.

Two-step verification was temporarily turned off for this exercise.

```python
import smtplib
from email.header import Header
from email.mime.text import MIMEText

# Insert Your ID Here
SECRET_ID = "YOUR ID"
SECRET_PASS = "YOUR PASSWORD"

myemail = "YOUR EMAIL"
youremail = "TARGET EMAIL"

# Connect to Naver's SMTP server on port 587 and upgrade the connection to TLS
smtp = smtplib.SMTP("smtp.naver.com", 587)
smtp.ehlo()
smtp.starttls()
smtp.login(SECRET_ID, SECRET_PASS)

subject = "Subject Test"
message = "Message Test"

# Pass str, not bytes; the charset argument takes care of the UTF-8 encoding
msg = MIMEText(message, _subtype="plain", _charset="utf-8")
msg["Subject"] = Header(subject, "utf-8")
msg["From"] = myemail
msg["To"] = youremail

smtp.sendmail(myemail, youremail, msg.as_string())
smtp.quit()
```

This is the basic structure of code that talks to an SMTP server.
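The flow above is: connect to port 587 → `ehlo()` → `starttls()` → `login()` → `sendmail()` → `quit()`. For comparison, a minimal sketch of the same flow with the standard library's newer `email.message.EmailMessage` API and `SMTP.send_message()`, with message construction split out so it can be checked without connecting to a server. `build_message` and `send` are hypothetical names; the host and port are the ones used above:

```python
import smtplib
from email.message import EmailMessage

SMTP_HOST = "smtp.naver.com"  # from the post
SMTP_PORT = 587               # plain connection, upgraded with STARTTLS

def build_message(sender, recipient, subject, body):
    """Hypothetical helper: a text/plain message; non-ASCII text is encoded automatically."""
    msg = EmailMessage()
    msg["Subject"] = subject
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(body)
    return msg

def send(msg, user, password):
    """Hypothetical helper: leaving the with-block sends QUIT, even after an error."""
    with smtplib.SMTP(SMTP_HOST, SMTP_PORT) as smtp:
        smtp.ehlo()
        smtp.starttls()             # login() re-sends EHLO automatically after this
        smtp.login(user, password)
        smtp.send_message(msg)      # sender and recipients are taken from the headers
```

`send(build_message("YOUR EMAIL", "TARGET EMAIL", "Subject Test", "Message Test"), "YOUR ID", "YOUR PASSWORD")` would then do what the script above does.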

Combining this with the directory watcher from earlier:

import os

import time

import smtplib

from email.header import Header

from email.mime.text import MIMEText

DIR_WATCH = "static"

def mail_sender(content):

# Insert Your ID Here

SECRET_IP = "YOUR ID"

SECRET_PASS = "YOUR PASSWORD"

smtp = smtplib.SMTP("smtp.naver.com", 587)

smtp.ehlo()

smtp.starttls()

myemail = "YOUR EMAIL"

youremail = "TARGET EMAIL"

smtp.login(SECRET_IP, SECRET_PASS)

subject = "Detecting new Inportant Data"

message = f"Automatic catch Information : \n{content}"

msg = MIMEText(message.encode("utf-8"), _subtype="plain", _charset="utf-8")

msg["Subject"] = Header(subject.encode("utf-8"), "utf-8")

msg["From"] = myemail

msg["To"] = youremail

smtp.sendmail(myemail, youremail, msg.as_string())

smtp.quit()

#

previous_files = set(os.listdir(DIR_WATCH))

# WatchDogs

while True:

time.sleep(1)

print("Watching..")

current_files = set(os.listdir(DIR_WATCH))

new_files = current_files - previous_files

# Update

for file in new_files:

print(f"+ Detected New File : {file}")

with open(f"{DIR_WATCH}/{file}", "r", encoding="utf-8") as f:

targetcontent = ""

flag = False

lines = f.readlines()

for line in lines:

# Check

if line.startswith("#") or line.startswith("//"):

flag = True

targetcontent = targetcontent + line

if flag == True:

print("Mail Sent")

mail_sender(targetcontent)

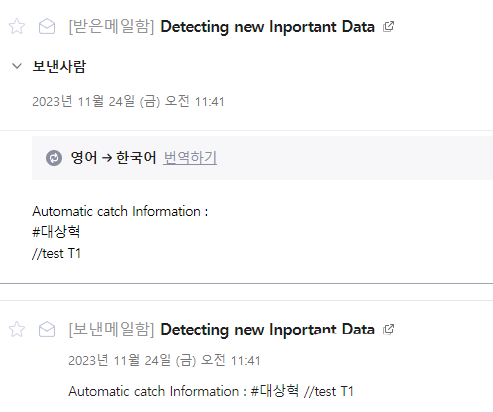

previous_files = current_files이런 코드를 만들 수 있음

결과도 잘 되는 것을 확인 가능
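In the loop above, `flag` and `targetcontent` only decide whether a new file is worth a mail. A minimal sketch of that decision as a function that returns the matched lines (an empty string means there is nothing to send), with the sending step passed in as an argument so the check can be tried without a mail account. `extract_important` and `handle_new_file` are hypothetical names:

```python
import tempfile
from pathlib import Path

PREFIXES = ("#", "//")  # same markers as the original check

def extract_important(path):
    """Hypothetical helper: every line that starts with a marker, or "" if none."""
    text = Path(path).read_text(encoding="utf-8")
    return "".join(line for line in text.splitlines(keepends=True)
                   if line.startswith(PREFIXES))

def handle_new_file(path, send):
    """Hypothetical helper: call send(content) only when something was found."""
    content = extract_important(path)
    if content:        # plays the role of `flag == True`
        send(content)  # e.g. the post's mail_sender
    return content

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        note = Path(d) / "note.txt"
        note.write_text("# api_key=abc\nhello\n// admin only\n", encoding="utf-8")
        sent = []
        handle_new_file(note, sent.append)
        print(sent)  # ['# api_key=abc\n// admin only\n']
```

Inside the watch loop this would be called as `handle_new_file(os.path.join(DIR_WATCH, file), mail_sender)`.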

```python
import os
import time
import smtplib
from dotenv import load_dotenv
from email.header import Header
from email.mime.text import MIMEText
from email.mime.multipart import MIMEMultipart
from email.mime.application import MIMEApplication

DIR_WATCH = "static"
detected_files = "detected_files.txt"  # detection log, also sent as an attachment

def mail_sender(description):
    # Read the login info from a .env file instead of hard-coding it
    # (the key names SECRET_ID / SECRET_PASS are an assumption)
    load_dotenv()
    SECRET_ID = os.getenv("SECRET_ID")
    SECRET_PASS = os.getenv("SECRET_PASS")

    myemail = "YOUR EMAIL"
    youremail = "TARGET EMAIL"

    smtp = smtplib.SMTP("smtp.naver.com", 587)
    smtp.ehlo()
    smtp.starttls()
    smtp.login(SECRET_ID, SECRET_PASS)

    msg = MIMEMultipart()

    # Mail Header
    subject = "Detecting new Important Data"
    msg["Subject"] = Header(subject, "utf-8")
    msg["From"] = myemail
    msg["To"] = youremail

    # Mail Content: body text
    message = f"Automatic catch Information : \n{description}"
    msg.attach(MIMEText(message, _subtype="plain", _charset="utf-8"))

    # Attachment: the detection log
    with open(detected_files, "rb") as f:
        etc_content = MIMEApplication(f.read())
    etc_content.add_header(
        "Content-Disposition", "attachment", filename=os.path.basename(detected_files)
    )
    msg.attach(etc_content)

    smtp.sendmail(myemail, youremail, msg.as_string())
    smtp.quit()


previous_files = set(os.listdir(DIR_WATCH))

# WatchDogs
while True:
    time.sleep(1)
    print("Watching..")

    current_files = set(os.listdir(DIR_WATCH))
    new_files = current_files - previous_files

    # Update
    for file in new_files:
        path = os.path.join(DIR_WATCH, file)
        if not os.path.isfile(path):  # skip subdirectories
            continue
        print(f"+ Detected New File : {file}")

        targetcontent = ""
        flag = False  # Trigger

        with open(path, "r", encoding="utf-8") as f:
            lines = f.readlines()

        for line in lines:
            # Check
            if line.startswith("#") or line.startswith("//"):
                flag = True
                targetcontent = targetcontent + line

        if flag:
            # Write the log first, because mail_sender() attaches it
            with open(detected_files, "a", encoding="utf-8") as wf:
                wf.write(
                    f"Detecting new Important Data\n{file} content : \n{targetcontent}\n"
                )
            print("> Changes Saved")

            mail_sender(targetcontent)
            print("> Mail Sent")

    previous_files = current_files
```

The ultimate email automation program
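The final version builds the multipart message by hand with `MIMEMultipart`, `MIMEText` and `MIMEApplication`. For comparison, a minimal sketch of the same mail (text body plus `detected_files.txt` as an `application/octet-stream` attachment) using `EmailMessage.add_attachment()`, which turns the message into `multipart/mixed` on its own. `build_report_mail` is a hypothetical name, and the sketch only builds the message; it does not send it:

```python
import tempfile
from email.message import EmailMessage
from pathlib import Path

def build_report_mail(sender, recipient, description, attachment_path):
    """Hypothetical helper: text body plus the detection log as an attachment."""
    msg = EmailMessage()
    msg["Subject"] = "Detecting new Important Data"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(f"Automatic catch Information : \n{description}")

    path = Path(attachment_path)
    # Same MIME type that MIMEApplication uses by default
    msg.add_attachment(
        path.read_bytes(),
        maintype="application",
        subtype="octet-stream",
        filename=path.name,  # only the base name, not the local directory
    )
    return msg

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        log = Path(d) / "detected_files.txt"
        log.write_bytes(b"# secret\n")
        mail = build_report_mail("me@example.com", "you@example.com", "# secret\n", log)
        print(mail.get_content_type())  # multipart/mixed
```

To send it, the result can be passed to `smtp.send_message(mail)` instead of calling `sendmail(...)` with `as_string()`.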

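What is crawling?

Crawling: the process of gathering information from a target site, such as its content and links.

Web service crawling works on what the browser receives: HTML, JavaScript, CSS, etc. (client-side code).

Fewer and fewer services provide their data through an API.

A client's web service may expose important (sensitive) information in its pages → this is one of the items a web vulnerability scanner checks.

Collect security issues, security trends, etc. → clean up (refine) the data → provide it to clients.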

<Libraries used>

pip install requests

pip install beautifulsoup4

pip install lxml

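```python
import requests
from bs4 import BeautifulSoup as bs

url = "https://www.mbn.co.kr/news/society/4914296"

# Browser-like User-Agent; some sites reject the default requests User-Agent
headers = {"User-Agent": "Mozilla/5.0"}

req = requests.get(url, headers=headers, timeout=10)
soup = bs(req.text, "lxml")  # parse with the lxml parser

# find_all("a") returns every <a> tag in the document
links = soup.find_all("a")

for link in links:
    if "href" in link.attrs:  # skip <a> tags without a link target
        href = link["href"]
        print(f"{link.get_text(strip=True)}, link: {href}")
```

Crawling practice (using find_all)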
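`soup.find_all("a")` walks the parsed document and returns every `<a>` tag. To show what that loop does without installing anything, a minimal sketch of the same link collection with the standard library's `html.parser.HTMLParser`; it also turns relative `href` values into absolute URLs with `urllib.parse.urljoin`, which the original loop does not do. `LinkCollector` is a hypothetical class name and the HTML is an inline sample rather than a real page:

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class LinkCollector(HTMLParser):
    """Hypothetical class: collects (text, absolute URL) for each <a href=...>."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None  # href of the <a> currently open, if any
        self._text = []    # text pieces seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href is not None:  # same idea as `"href" in link.attrs`
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None

if __name__ == "__main__":
    sample = '<p><a href="/news/1">First</a> <a href="https://example.com/x">X</a><a>no href</a></p>'
    parser = LinkCollector("https://example.com/")
    parser.feed(sample)
    for text, href in parser.links:
        print(f"{text}, link: {href}")
    # First, link: https://example.com/news/1
    # X, link: https://example.com/x
```

BeautifulSoup is still the more convenient choice for real pages; the sketch only makes the mechanics of tag-by-tag parsing visible.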

```python
import requests
from bs4 import BeautifulSoup as bs

url = "http://www.boannews.com/"
headers = {"User-Agent": "Mozilla/5.0"}

req = requests.get(url, headers=headers, timeout=10)
soup = bs(req.text, "lxml")

# select() takes a CSS selector: <p> inside <li> inside the <ul> of #headline2
tags = soup.select("#headline2 > ul > li > p")

for tag in tags:
    # get_text() also works when <p> has nested tags (tag.string would be None then)
    print(f"- {tag.get_text(strip=True)}")
```

Crawling practice (using select)

```python
import requests
from urllib.parse import urljoin
from bs4 import BeautifulSoup as bs

target_url = "https://www.malware-traffic-analysis.net/2023/"
headers = {"User-Agent": "Mozilla/5.0"}

req = requests.get(target_url + "index.html", headers=headers, timeout=10)
soup = bs(req.text, "lxml")

# Main content     : .content > ul
# Individual entry : [.content > ul] > li
# Issue link       : [.content > ul > li] > .main_menu  (an <a> tag, since it has an href)
# title            : text of the .main_menu link
# link             : its href, relative to target_url

origin_contents = soup.select(".content > ul > li > .main_menu")

# Open the output file once instead of once per entry
with open("crawl_datas.txt", "a", encoding="utf-8") as f:
    for content in origin_contents:
        title = content.get_text(strip=True)
        link = urljoin(target_url, content["href"])
        f.write(f"@ ISSUES : {title}\n")
        f.write(f"link HERE >> {link}\n\n")
```

Crawling exercise (automatically collecting issues)
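Two details of this exercise are worth a closer look: building links by plain string concatenation breaks on hrefs such as `../about.html` or absolute URLs, which `urljoin` resolves correctly; and because `crawl_datas.txt` is opened in append mode, running the script again writes every issue a second time. A minimal sketch that resolves links with `urllib.parse.urljoin` and skips links already present in the file; `save_new_issues` is a hypothetical name, and the `(title, href)` pairs are sample values standing in for what the `.main_menu` loop extracts:

```python
import tempfile
from pathlib import Path
from urllib.parse import urljoin

BASE_URL = "https://www.malware-traffic-analysis.net/2023/"  # target_url from the post

def save_new_issues(path, issues, base_url=BASE_URL):
    """Hypothetical helper: append (title, href) pairs whose absolute link
    is not in the file yet; return how many entries were written."""
    path = Path(path)
    existing = path.read_text(encoding="utf-8") if path.exists() else ""
    written = 0
    with path.open("a", encoding="utf-8") as f:
        for title, href in issues:
            link = urljoin(base_url, href)  # handles relative, "../" and absolute hrefs
            if f"link HERE >> {link}\n" in existing:
                continue  # saved on an earlier run or earlier in this batch
            entry = f"@ ISSUES : {title}\nlink HERE >> {link}\n\n"
            f.write(entry)
            existing += entry
            written += 1
    return written

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        out = Path(d) / "crawl_datas.txt"
        sample = [("Issue A", "01/03/index.html"), ("Issue B", "../about.html")]
        print(save_new_issues(out, sample))  # 2
        print(save_new_issues(out, sample))  # 0
```

The output format is the same `@ ISSUES` / `link HERE >>` layout as above, so the duplicate check also works on a file written by the original script.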

Creating an Excel file (uses the openpyxl and Faker packages)

```python
import openpyxl
from faker import Faker

workbook = openpyxl.Workbook()
worksheet = workbook.active  # the default first sheet

# Header row
worksheet["A1"] = "name"
worksheet["B1"] = "email"
worksheet["C1"] = "phone_number"

fake = Faker("ko_KR")  # Korean-locale fake data

# Rows 2 to 49: 48 fake members
for row in range(2, 50):
    worksheet.cell(row=row, column=1, value=fake.name())
    worksheet.cell(row=row, column=2, value=fake.email())
    worksheet.cell(row=row, column=3, value=fake.phone_number())

workbook.save("member.xlsx")
```

Excel creation practice
